How is Facebook Content Moderated?

The Internet is full of offensive content, and social media is no different. Facebook is one of many social networks that try to clean up our newsfeeds so we can enjoy baby photos and funny videos without having to filter through the scariest content the web has to offer.

Source: WhoIsHostingThis

Infographic Summary:

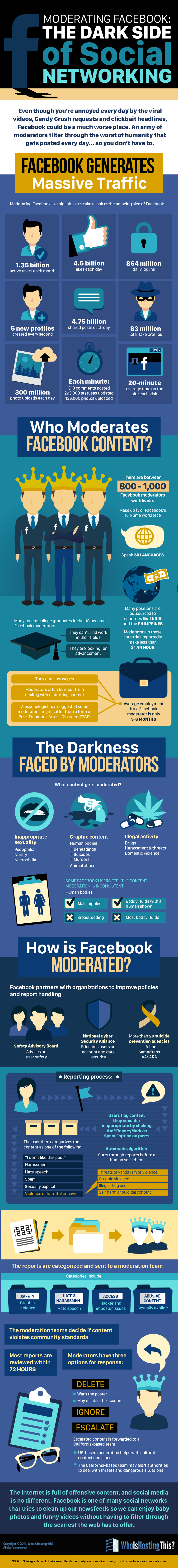

Even though you’re annoyed every day by viral videos, Candy Crush requests, and clickbait headlines, Facebook could be a much worse place. An army of moderators filters through the worst of humanity that gets posted every day… so you don’t have to.

Facebook Traffic Stats

- 1.35 billion active users each month

- 4.5 billion likes each day

- 864 million daily logins

- 5 new profiles created every second

- 4.75 billion shared posts each day

- 83 million total fake profiles

- 300 million photo uploads each day

- 510 comments, 293k status updates, 136k photos uploaded per minute

- 20-minute average time on the site per visit

Who moderates Facebook content?

It is estimated that there are between 800 and 1,000 full-time Facebook moderators globally, making up a third of Facebook’s total workforce. Many of these positions are outsourced to countries such as India or the Philippines, where employees reportedly make less than $1 an hour.

Many recent college graduates in the United States become Facebook moderators, either because they can’t find work in their respective fields or because they are looking for career advancement opportunities. Being a Facebook moderator is tough work. Moderators earn low wages, burn out from dealing with disturbing content, and may even develop post-traumatic stress disorder (PTSD). The average tenure for a Facebook moderator is only about 3 to 6 months.

How exactly is Facebook moderated?

Facebook partners with organizations to improve its policies and report handling. A Safety Advisory Board advises on user safety, the National Cyber Security Alliance educates users on account and data security, and more than 20 suicide prevention agencies assist with moderation.

The Reporting Process

- Users flag content they consider inappropriate by clicking the "Report/Mark as Spam" option on posts. An automated algorithm sorts through the reports before human eyes see them.

- The user categorizes the inappropriate content.

- The reports are categorized and sent to a team of moderators.

- The moderation teams decide whether the content violates Facebook’s terms and conditions.

- The moderator has three options: delete, ignore, or escalate.
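
For readers who think in code, the reporting steps listed here can be pictured roughly as the small Python sketch below. It is only an illustration under stated assumptions, not Facebook's actual system: the names `Report`, `sort_reports`, and `decide` are hypothetical, the automated sorting is reduced to grouping reports by the category the user picked, and the moderator's judgment is reduced to two yes/no inputs.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    """The three options the article says a moderator has."""
    DELETE = "delete"
    IGNORE = "ignore"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class Report:
    """Hypothetical record of one user report."""
    post_id: int
    category: str  # category chosen by the reporting user


def sort_reports(reports):
    """Stand-in for the automated sorting step: group reports by category
    so each group can be sent to a team of moderators."""
    queues = defaultdict(list)
    for report in reports:
        queues[report.category].append(report)
    return dict(queues)


def decide(violates_terms, needs_second_opinion=False):
    """Stand-in for the human decision: delete, ignore, or escalate."""
    if needs_second_opinion:
        return Decision.ESCALATE
    return Decision.DELETE if violates_terms else Decision.IGNORE


# Example data (made up for illustration).
reports = [Report(1, "spam"), Report(2, "harassment"), Report(3, "spam")]
queues = sort_reports(reports)
```

In this sketch, `queues["spam"]` holds the two spam reports in the order they arrived, and a moderator who is unsure about a post would call `decide(True, needs_second_opinion=True)` to escalate it rather than delete it outright.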